XFA/Data Handling Model: Difference between revisions

(write up a transfer, outline the remainder) |

(→Recovery: new section) |

||

| Line 40: | Line 40: | ||

* The filter produces a final bytestream, containing one debit for each credit applied to its source bytestream; it records the corresponding byteranges in the index. | * The filter produces a final bytestream, containing one debit for each credit applied to its source bytestream; it records the corresponding byteranges in the index. | ||

* The destination element divides its bytestream into a number of equal-sized device blocks, and writes it to permanent storage. Note that the final block is shorter than the rest, representing an EOF. See the [[Device API]] for details. | * The destination element divides its bytestream into a number of equal-sized device blocks, and writes it to permanent storage. Note that the final block is shorter than the rest, representing an EOF. See the [[Device API]] for details. | ||

The result is the following: | |||

* A mapping of collections to byteranges (from the application) | |||

* For each filter, a mapping of byteranges in the source bytestream to credits, and debits to byteranges in the target bytestream | |||

* A mapping of byteranges to tape blocks | |||

* A tape file full of data (possibly split -- we don't address that possibility here) | |||

All of the mappings relate integers (credits or debits) to byteranges (represented as an inclusive interval [min, max]). Such a mapping can be stored in an RDBMS efficiently, using ''O(n)'' space, where ''n'' is the number of credits or debits, not the length of the bytestream. | |||

== Recovery == | |||

Given the above mappings and a tape full of data, we can perform a recovery. | |||

[[Image:XFA-recovery-seekable.png|center|A normal recovery, with seekable filters.]] | |||

In this diagram, the pink represents the data used for recovery. The blue rectangles are data that are thrown out at each stage of the recovery. | |||

Before the recovery can begin, Amanda does some calculations. Based on the user objects to be recovered, Amanda calculates the collections required; in this diagram, that is collections 4 and 5. Amanda converts these collections into a byterange using the collection -> byterange mapping. Looking at the first filter, Amanda selects the set of credits that will completely cover that byterange, possibly including some extra data; in this case, credits 2 and 3 are needed. Amanda converts the equivalent debits into byteranges in the next bytestream, and repeats the process with the next filter; in this case Amanda needs credits 1, 2, and 3 from the second filter. Finally, Amanda uses the bytestream -> tape blocks mapping to determine the data blocks that must be read. | |||

Now, working bottom to top, Amanda reads the appropriate blocks from tape, discards a bit of data (blue) to get to the beginning of credit 1 for the second (bottom) filter, and passes the data to the filter, which operates in reverse. The filter produces another bytestream, from which Amanda must discard some initial and final data, and which is then passed to the first filter. Data in that filter's output is again discarded, leaving only the portions of the bytestream corresponding to collections 4 and 5, which the application uses to recover the requested user objects. | |||

If the required set of collections is not contiguous -- for example, if the user requested one user object near the beginning of the dump and one near the end -- then Amanda has two options. It can restore an entire range of collections including the required collections, and throw out those that are not required; or it can split the recovery into multiple transfers, one transfer for each contiguous set of collections. In practice, the decision of when to split a recovery will be based on heuristics balancing the time required to read some extra collections against the time required to perform multiple transfers. | |||

= Complications = | = Complications = | ||

Revision as of 15:13, 1 October 2007

Introduction

Amanda's data-handling model sits at the core of the application. Its purpose is fairly simple: move data between a backup client and archival storage. However, it is subject to some stringent constraints.

- Tapes are linear-access devices, with optimal block- and file-sizes; performance deteriorates rapidly as parameters diverge from these optima.

- Recovery of single files (or "user objects", as described below) should be relatively quick, and not require reading an entire dumpfile.

- Recovery should be possible with only native tools and the contents of tapes.

- Amanda must be able to recover indexes and all other metadata from the on-tape data alone (a "reindex" operation).

Terminology

Much of this terminology is part of the the Application API; this section briefly summarizes those terms..

- Application

- An Amanda component that interfaces directly with the client data.

- User Object

- The smallest object that can be restored (e.g., a file for GNU Tar).

- Collection

- The smallest amount of data that the application can operate on (e.g., a 512-byte block for GNU Tar).

- Transfer

- A data-movement operation.

- Transfer Element

- A component of a transfer; elements are combined in a kind of pipeline, with each element sending data to the next.

- Filter

- A transfer element which performs some transformation, such as compression or encryption, on the data that passes through it. Filters are described as operating normally when performing a backup, and in reverse on restore. When operating in reverse, a filter transforms data that it produced during normal operation, transforming it back into the original input. For example, an encryption filter encrypts in normal operation, and decrypts in reverse.

- Seekable Filter

- A filter which, when operating in reverse, can begin at arbitrary points in the datastream; contrast non-seekable filters.

- Non-seekable Filter

- A filter which, when operating in reverse, must always begin at byte zero of the datastream.

- Catenary Filter

- A filter for which concatenation is distributive over filtering. A filter is catenary if

cat file1 file2 | filter | filter -reverse

produces the same output as

(filter <file1 ; filter <file2) | filter -reverse

Gzip, for example is catenary.

- Bytestream

- A linear sequence of bytes; the data exchanged between transfer elements.

- Debit

- The creation of a range of bytes in a bytestream by a transfer element (we try to avoid the terms "block" or "chunk" here, although the effect is similar).

- Credit

- The consumption of a range of bytes from a bytestream by a transfer element.

Diagrams

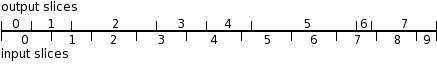

A bytestream is represented by a solid horizontal line; debits made by the transfer element producing the bytestream are indicated with tickmarks above the line, while credits for the consuming element are delimited with tickmarks below the line.

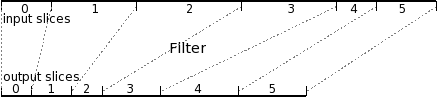

A filter element, then, consumes one bytestream (the upper bytestream) and produces another (the lower). Note that there is a one-to-one correspondence of an element's credits (against its source bytestream) and debits (to the bytestream it produces).

A Transfer

Let's look at a full transfer, operating normally:

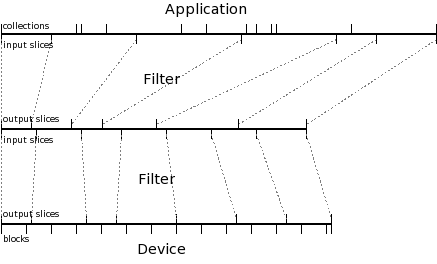

Reading the diagram from top to bottom:

- The application produces a single bytestream, recording (in the index) the byteranges representing each collection.

- The first (topmost) filter divides that bytestream up into credits, and records the corresponding byteranges in the index.

- The filter produces a different bytestream, containing one debit for each credit applied to the first bytestream; the byteranges of the debits are also recorded in the index.

- The second filter independently divides that bytestream up into credits, and records the corresponding byteranges in the index.

- The filter produces a final bytestream, containing one debit for each credit applied to its source bytestream; it records the corresponding byteranges in the index.

- The destination element divides its bytestream into a number of equal-sized device blocks, and writes it to permanent storage. Note that the final block is shorter than the rest, representing an EOF. See the Device API for details.

The result is the following:

- A mapping of collections to byteranges (from the application)

- For each filter, a mapping of byteranges in the source bytestream to credits, and debits to byteranges in the target bytestream

- A mapping of byteranges to tape blocks

- A tape file full of data (possibly split -- we don't address that possibility here)

All of the mappings relate integers (credits or debits) to byteranges (represented as an inclusive interval [min, max]). Such a mapping can be stored in an RDBMS efficiently, using O(n) space, where n is the number of credits or debits, not the length of the bytestream.

Recovery

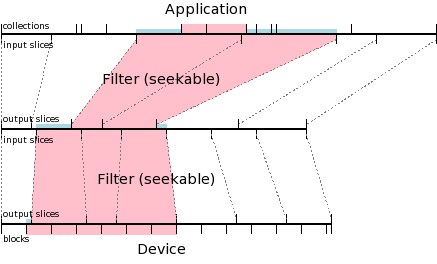

Given the above mappings and a tape full of data, we can perform a recovery.

In this diagram, the pink represents the data used for recovery. The blue rectangles are data that are thrown out at each stage of the recovery.

Before the recovery can begin, Amanda does some calculations. Based on the user objects to be recovered, Amanda calculates the collections required; in this diagram, that is collections 4 and 5. Amanda converts these collections into a byterange using the collection -> byterange mapping. Looking at the first filter, Amanda selects the set of credits that will completely cover that byterange, possibly including some extra data; in this case, credits 2 and 3 are needed. Amanda converts the equivalent debits into byteranges in the next bytestream, and repeats the process with the next filter; in this case Amanda needs credits 1, 2, and 3 from the second filter. Finally, Amanda uses the bytestream -> tape blocks mapping to determine the data blocks that must be read.

Now, working bottom to top, Amanda reads the appropriate blocks from tape, discards a bit of data (blue) to get to the beginning of credit 1 for the second (bottom) filter, and passes the data to the filter, which operates in reverse. The filter produces another bytestream, from which Amanda must discard some initial and final data, and which is then passed to the first filter. Data in that filter's output is again discarded, leaving only the portions of the bytestream corresponding to collections 4 and 5, which the application uses to recover the requested user objects.

If the required set of collections is not contiguous -- for example, if the user requested one user object near the beginning of the dump and one near the end -- then Amanda has two options. It can restore an entire range of collections including the required collections, and throw out those that are not required; or it can split the recovery into multiple transfers, one transfer for each contiguous set of collections. In practice, the decision of when to split a recovery will be based on heuristics balancing the time required to read some extra collections against the time required to perform multiple transfers.